Say you have a divorced couple who are discussing over the phone how to divide up their possessions. They split pretty much everything equally, until they reach their car. They know that selling it won't get them nearly what it's worth, so they'd prefer that one of them just keep it, but they can't agree on which one. They don't want to meet and they don't want to get another party involved. How can they determine which one should get the car?

They can flip a coin for it, but since this is over the phone, only one of them can see the coin, and the other has no reason to trust the reported result. Ideally one side would decide what heads and tails mean, and the other would flip and say whether it came up heads or tails, but they need a secure method to exchange these secrets, without either side fearing that the other changed its answer after the fact.

This is the problem that today's public/private key secure communication technology is built around, and there is a solution.

One party takes two very large prime numbers, multiplies them, and sends the product to the other party. The first party keeps the two factors secret; the other party has no reasonable way to determine them without months of computation. The first party then informs the second party that once the coin is flipped, they will take the larger factor and look at its fifth digit from a particular end. If that digit is odd, the first party chose heads and the other tails; if it is even, the winning conditions are reversed. Now the second party can flip the coin and report the result back to the first party.

At this point, everything needed to determine the winner has been transferred securely, so the secret can now be revealed to decode who the winner was. The first party tells the second what the two factors were, the second checks that they multiply to the number sent earlier, and they will have reached an agreement on who keeps the car.
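To make the steps concrete, here is a minimal Python sketch of the exchange. It is only an illustration under a few assumptions of mine: the function names (`is_probable_prime`, `first_party_call`, `settle`) are made up, the "particular end" is taken to be the right-hand end, and the primes are tiny compared to what a real exchange would need. The primality check on the revealed factors is also my addition; without it, the first party could send a product of three or more primes and later pick whichever pair of factors gives the digit they want.

```python
def is_probable_prime(n: int) -> bool:
    """Miller-Rabin test with a fixed set of bases (exact for numbers this small)."""
    if n < 2:
        return False
    bases = (2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37)
    for p in bases:
        if n % p == 0:
            return n == p
    d, r = n - 1, 0
    while d % 2 == 0:
        d //= 2
        r += 1
    for a in bases:
        x = pow(a, d, n)
        if x in (1, n - 1):
            continue
        for _ in range(r - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False
    return True


def first_party_call(larger_factor: int) -> str:
    """Odd fifth digit (counted from the right) means the first party chose heads."""
    fifth_digit = (larger_factor // 10**4) % 10
    return "heads" if fifth_digit % 2 == 1 else "tails"


def settle(product: int, p: int, q: int, coin: str) -> str:
    """Run by the second party once the factors are revealed."""
    if p * q != product or not (is_probable_prime(p) and is_probable_prime(q)):
        raise ValueError("revealed factors do not match the number sent earlier")
    return "first" if first_party_call(max(p, q)) == coin else "second"


# Step 1: the first party picks two primes and sends only their product.
p, q = 104729, 1299709          # toy-sized primes, for illustration only
product = p * q

# Step 2: the second party flips the coin and reports it.
coin = "heads"

# Step 3: the first party reveals p and q, and the winner is decided.
winner = settle(product, p, q, coin)
```

Here the larger factor is 1299709, whose fifth digit from the right is 9, so the first party's call was heads and `winner` ends up as `"first"`.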

Provided that the phone call was shorter than the time it would take the second party to factor the number, and everything was done in the proper order, this exchange should be secure. However, what if the second party happened to know that number already and had the factors on hand? That party could cheat by reporting whichever coin result wins, rendering the transaction insecure.

When we look at secure communication technology today, the protocols generally have each side come up with its own variation of the prime number example above, and each side sends the other a "public key" that matches its own secret "private key". The private key can't be easily derived from the public key, but each one decodes data encoded with the other. Then, when transferring, each side encodes and decodes data with its own private key and the other's public key. As long as each side is sure the public key it holds really belongs to the other, an attacker can't jump in the middle, because decoding the data or impersonating one of them would require a private key, which is never distributed.
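As a rough illustration of how a public and a private key undo each other, here is textbook RSA with deliberately tiny numbers. This is a sketch, not how any real library does it: the helper name `apply_key` is mine, real keys are thousands of bits long, and real systems add padding and other safeguards on top.

```python
# Toy RSA with textbook-sized numbers, purely for illustration.
p, q = 61, 53                 # the two secret primes
n = p * q                     # 3233, shared by both keys
phi = (p - 1) * (q - 1)       # 3120, easy to compute only if you know p and q
e = 17                        # public exponent, chosen coprime to phi
d = pow(e, -1, phi)           # private exponent (needs Python 3.8+)


def apply_key(value: int, exponent: int, modulus: int = n) -> int:
    """Encoding and decoding are the same operation with different exponents."""
    return pow(value, exponent, modulus)


message = 65

# Anyone can encode with the public key (e, n); only the holder of d can decode.
ciphertext = apply_key(message, e)
recovered = apply_key(ciphertext, d)

# The reverse direction works too: encode with the private key (a signature),
# and anyone can check it with the public key.
signature = apply_key(message, d)
verified = apply_key(signature, e)
```

Both `recovered` and `verified` come back as the original message. Getting `d` from `e` and `n` alone would require factoring `n`, which is trivial here and impractical at real key sizes.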

This security falls apart when the keys in use are older than their cryptographically secure time frame, or when the application doesn't follow the proper procedures. If keys are long and strong enough, are always replaced before their safe time frame runs out, and connections aren't left open indefinitely, one should be safe in relying upon the keys. However, a chain is only as strong as its weakest link. If the application doesn't follow the proper procedures for key exchange, or has errors in its authentication and validation routines, it could be leaving its users and their data open to compromise.

If your security application is closed source, there is a chance that a backdoor has been programmed into it and will go unnoticed. When a company has few employees in comparison to the sheer amount of code in its products, there is little to stop one rogue employee from slipping a tiny bit of code into a complex piece of security-related code. When the amount of code greatly outnumbers the coders, very few people will bother to look and try to envision all the circumstances a bit of tricky code will have to handle and how it will end up handling them.

Consider how someone hacked into a server hosting the Linux source and tried to embed a backdoor into the code that would later make up Linux releases around the world; read about it here. They inserted a bit of code so that if two rare options were used together, an unprivileged user would gain administrator capabilities on that machine. Such a thing would go unnoticed if the person had the ability to modify the source at will without raising any eyebrows.

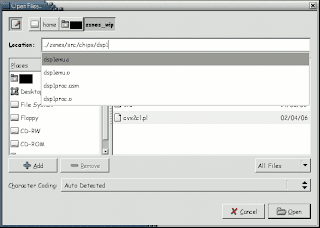

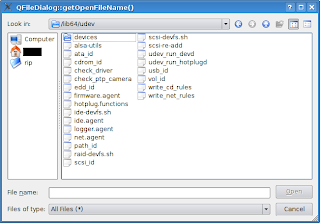

Let me offer an example. The SSH program allows a user to connect to a computer running an SSH server. To connect, the user has to supply a user name and password, and can also tell SSH which modes to use.

Here are two existing options:

-1 Forces ssh to try protocol version 1 only.

-2 Forces ssh to try protocol version 2 only.

No one would normally think to force SSH to try everything it was going to try anyway. Imagine if the developer of an SSH server stuck in a bit of code so that if the client asked for only protocol version 1 and also for only protocol version 2, access was granted even if the password was incorrect. This rogue developer could then gain access to every machine running his SSH server, with no one the wiser. Once this developer knows that few at his company will see his work, he has nothing to lose by adding such code. If he happens to be caught, and the code was in a confusing section, he can say he was trying to handle an invalid case and apparently didn't do it right; it was only an accident.
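To show how innocent such a thing can look, here is a toy Python sketch of the scenario. Nothing in it comes from a real SSH implementation: the `authenticate` function, its `only_v1` and `only_v2` parameters, and the `PASSWORDS` table are all hypothetical stand-ins for the idea described above.

```python
import hmac

# Hypothetical account table; a real server would store salted hashes.
PASSWORDS = {"alice": "correct horse battery staple"}


def authenticate(user, password, only_v1=False, only_v2=False):
    """Return True if the login should be accepted."""
    if only_v1 and only_v2:
        # Reads like sloppy handling of a contradictory request,
        # but it actually lets anyone in without a valid password.
        return True
    expected = PASSWORDS.get(user)
    if expected is None:
        return False
    return hmac.compare_digest(expected.encode(), password.encode())


def audit_flag_combinations(user, bad_password):
    """Try a wrong password under every flag combination; list the ones accepted."""
    return [
        (v1, v2)
        for v1 in (False, True)
        for v2 in (False, True)
        if authenticate(user, bad_password, only_v1=v1, only_v2=v2)
    ]
```

Calling `audit_flag_combinations("alice", "wrong")` reports the one combination that lets a bad password through. The catch is that somebody has to think of running that kind of check, which is exactly what outside review makes more likely.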

When your security program is closed source, do you really want to lay the security of your data in the hands of a disgruntled employee somewhere? Can you really trust the protection of walls that you can't see and that have no outside review process? Many internal reviews miss things in tricky sections, especially when the group in question takes pride in its work. I'm reviewing code and thinking to myself: "Hey, we wrote this, we're world class programmers, we're good at what we do, this code is too tricky to really go over with a fine-tooth comb, I'm sure it's right."

Keep in mind though, just because something is open source, doesn't mean something can't be snuck in either. It's just less likely for that malicious code to remain there for too long if the application/library in question is popular and has enough people reviewing it.

But even when something is open source, malicious code can be snuck in without anyone ever noticing; read this paper for background. Now while the attack described, where one edits the compiler to recognize certain security code and handle it in a malicious manner, is a bit far-fetched, similar attacks a bit closer to home are quite possible. If you're using a Linux distro which offers binary packages, what really stops a package maintainer from compiling a modified application and putting that in the distro's repositories? Those running a secure environment may want to consider compiling certain packages themselves rather than trusting binaries whose contents we really have no clue about.
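If you do compile a package yourself, one cheap check is to compare your result with the binary the distro ships. The sketch below does that by hashing both files. It only tells you something under a big assumption, namely that the build is reproducible (same source, compiler, and flags give byte-identical output), which not every package manages. The function names are mine.

```python
import hashlib


def sha256_of(path, chunk_size=1 << 16):
    """Hash a file in chunks so large binaries don't need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def same_binary(path_a, path_b):
    """True if the two files are byte-for-byte identical (up to SHA-256)."""
    return sha256_of(path_a) == sha256_of(path_b)
```

For example, `same_binary("/usr/bin/ssh", "./build/ssh")` (both paths hypothetical) would compare the installed binary against a local build. A mismatch doesn't prove tampering, since differing timestamps or compiler versions can cause one too, but it is a reason to look closer.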

But based on this paper, do we have to worry that the compiler, the OS, or other libraries won't produce the proper binary when we compile this security application ourselves?

Lucky for us, despite what newbie programmers want, our programming languages aren't made up of a series of very high level functions such as compile(), handle_security(), and the like. Such would make it much easier for someone to make the compiler or the library do something malicious when it encounters such a request in the source. In order for such an attack to be really successful, it would have to understand every bit of code it's compiling to make sure the resulting program won't be able to detect the trojan, which is extremely tricky if not near impossible for a compiler to do. Not using a high level compiler or virtual machine gives us a layer of security in that it would be harder for one to pass out an "evil compiler" that would understand what the developer was trying to do and instead have it do something malicious.
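A toy example may help show why recognizing the code is the hard part. The "compiler" below is just a Python function I made up (`evil_compile`) that runs source text and tampers with one function it knows by name; the magic password `letmein` is likewise invented. It is nowhere near a real compiler attack, only a sketch of the pattern matching such an attack depends on.

```python
CLEAN_SOURCE = '''
def check_password(supplied, expected):
    return supplied == expected
'''


def evil_compile(source: str) -> dict:
    """Toy 'compiler': runs the source and returns its namespace,
    but first tampers with any code it recognizes."""
    marker = "def check_password(supplied, expected):\n"
    if marker in source:
        # Inject a hidden branch right after the recognized function header.
        source = source.replace(
            marker,
            marker + "    if supplied == 'letmein':\n        return True\n",
        )
    namespace = {}
    exec(source, namespace)
    return namespace


# The recognized function gets the hidden branch...
trojaned = evil_compile(CLEAN_SOURCE)["check_password"]

# ...but the same logic under another name slips past untouched.
renamed_source = CLEAN_SOURCE.replace("check_password", "verify")
untouched = evil_compile(renamed_source)["verify"]
```

`trojaned("letmein", "secret")` is accepted while `untouched("letmein", "secret")` is not. A trivial rename defeats the toy, and a real attacker would have to anticipate every way a developer might write the same check.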

But if such an attack were to take place, we'd have to pull out hex editors and disassemblers to see that such code has been snuck in (something which we must do with closed source applications anyway). Take this a step further: if the OS were affected, or if the compiler were smart enough to recognize that it was compiling a hex editor, disassembler, or the like, it is theoretically possible to stick in code that would subvert file reading on executables and libraries, masking the malicious code even in the binary.

Now while some clever guy out there is thinking to himself: "Oh I'm going to do that, it'll be completely undetectable", such a course of action is much much easier said than done. I would be amazed if even a whole team of programmers would be able to pull such a monumental task off. I wouldn't worry too much that such magical wool has been invented to pull over our eyes when we try to decode a binary in a safe environment.

But I would worry if the application or the libraries it depends on are closed source. And even if we have the source, I would question where the binary we happen to be using comes from. If you're using even an open source application in something critical, I would advise having the binaries for your application and its related libraries examined in a safe environment just to be sure. I just hope no one has subverted an OS out there to alter non-executable reading and writing of executable files, and to have the OS strip and re-add code when executable files are transferred.